Troubleshooting Xdebug remote debugging sessions

As I've mentioned previously, remote debugging is one of the greatest features offered by Xdebug. Remote debugging allows you to open your website in your browser, which in turn starts a step-by-step debugger in your preferred IDE.

In theory, setting up a remote debugging session should be as simple as following these steps:

- Install the Xdebug extension in PHP, e.g.

  pecl install xdebug

- Enable the extension in your php.ini, e.g. add the following to your php.ini:

  zend_extension="xdebug.so"

- Enable remote debugging in your php.ini configuration, e.g.

  xdebug.remote_enable = 1

- Make your IDE listen for remote debugging connections

- Start the remote debugging session in your browser using an add-on or by adding ?XDEBUG_SESSION_START=my_session to the URL.
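Taken together, the php.ini changes from the steps above might look like the following minimal sketch. It uses the same setting names as this article and assumes that xdebug.so can be found in PHP's extension directory; if yours lives elsewhere, you may need a full path instead.

```ini
; Load the Xdebug extension (assumes xdebug.so is in PHP's extension directory)
zend_extension="xdebug.so"

; Allow Xdebug to open remote debugging connections to the IDE
xdebug.remote_enable = 1
```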

Sometimes things are not quite as simple. There have been plenty of times when I've had to figure out why remote debugging wasn't working on my own development environment or had to help others to figure out why it wasn't working for them.

In this article, I hope to go through some of the common issues that I've encountered when setting up Xdebug and how you can detect and solve these issues.

Making sure Xdebug is enabled

The first thing you absolutely want to make sure of is that the Xdebug extension is actually enabled. You also want to check this through a page served by your web server rather than from the command line. Even though you might see a line in the php -v output indicating that Xdebug is enabled, that doesn't mean your server has loaded the extension.

The easiest way to check this is to create a page that simply contains the following piece of code:

<?php phpinfo();

Usually, it's easiest to replace whatever you have as your application entry point (e.g. index.php) with this.

This piece of code will display your server's PHP configuration and all relevant information. To find out if you have the Xdebug extension enabled, just search the page for xdebug. If you can't find the appropriate section in your info page, it means the extension has not been loaded.

There are a number of reasons why Xdebug might not have been loaded. Here are some common issues that you should check:

- Ensure that the extension is loaded via the php.ini file loaded by the server. Use the "Loaded Configuration File" entry in your phpinfo() to verify that you added the zend_extension directive to the correct ini file (or to one of the other ini files listed by phpinfo()).

- Make sure you actually restarted your server. I would recommend stopping your server and using something like sudo lsof -nPi|grep httpd to ensure that no server process is still running. There have been times when I've had to manually kill server processes in order to restart the server because none of the command line commands worked.

- Check your server error logs to see if there were problems loading the extension. It's possible, for example, that your extension_dir setting is incorrect, which makes PHP unable to find the extension.

Check your Xdebug configuration

If you've verified in phpinfo() that the Xdebug extension is loaded but remote debugging is still not working, it's time to check your configuration. Remember that you should always use phpinfo() to ensure that any configuration change you make is reflected on the server.

The three most important configuration values are xdebug.remote_enable, xdebug.remote_host and xdebug.remote_port.

The value of xdebug.remote_enable should be "1". Otherwise, the remote debugging simply isn't enabled.

The value of xdebug.remote_host should be wherever you want Xdebug to connect to. If you're just using a local machine for development, it's usually just "localhost". However, if you're using a virtual machine, you may need to make sure that this points to whatever address your virtual machine uses to connect to your own computer. This may vary depending on the type of virtual machine and the operating system you are using.

The value of xdebug.remote_port should be the same port that your IDE is configured to listen on. Make sure, however, that Xdebug is not configured to use a port that is already in use by some other software. For example, the default port 9000 used by Xdebug is also the default port of php-fpm. Once you enable listening in your IDE, you should use the command line to verify that your IDE is actually listening on that port.

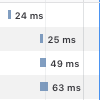

For example, you should see something like the following:

$ sudo lsof -nPi|grep 9000

phpstorm 2755 riimu 380u IPv6 0xd613775cd0f8575f 0t0 TCP *:9000 (LISTEN)
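As a rough sketch, the three settings discussed in this section might be combined like this for a purely local setup. The values are assumptions for illustration: localhost only fits when the IDE and the server run on the same machine, and the port must match whatever your IDE is actually listening on.

```ini
; Enable remote debugging
xdebug.remote_enable = 1

; Where the IDE is listening (assumption: same machine as the server)
xdebug.remote_host = localhost

; Must match the IDE's debug port; pick another value if php-fpm already uses 9000
xdebug.remote_port = 9000
```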

If you are using Xdebug on a remote host rather than the local machine or a local virtual machine, you may be able to enable the xdebug.remote_connect_back setting, which makes Xdebug connect back to the machine that made the request instead of using the xdebug.remote_host setting.
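A minimal sketch of that setup could look like the following. Keep in mind that with this option any machine that can reach the web server may be able to start a debugging session, so it is probably best kept to trusted networks.

```ini
xdebug.remote_enable = 1

; Connect back to the address that made the HTTP request;
; xdebug.remote_host is not used while this is enabled
xdebug.remote_connect_back = 1
```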

Enable remote debug logging

Now that you have verified that Xdebug is both enabled and hopefully configured properly, but still nothing happens, you should try enabling logging for the remote connections.

Xdebug offers a configuration option xdebug.remote_log to log all remote debugging sessions to a file. If you want to enable logging, make sure this option points to a writable file. If you're using something like /var/log/xdebug.log, make sure you also chmod the file appropriately so that your server can actually write into the file, e.g.

$ sudo touch /var/log/xdebug.log

$ sudo chmod a+rw /var/log/xdebug.log
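With the file in place, the matching php.ini entry might look like this. The path simply reuses the example above; any file that your server can write to should do.

```ini
; Log every remote debugging connection attempt to this file
xdebug.remote_log = /var/log/xdebug.log
```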

If you can see Xdebug writing to this log file when you re-enable remote debugging, but still nothing happens, the log should give you hints as to why it isn't working. For example, if Xdebug cannot connect to the listening IDE, you might see something like this:

Log opened at 2019-02-20 12:46:44

I: Connecting to configured address/port: localhost:9000.

W: Creating socket for 'localhost:9000', poll success, but error: Operation now in progress (19).

W: Creating socket for 'localhost:9000', poll success, but error: Operation now in progress (19).

E: Could not connect to client. :-(

Log closed at 2019-02-20 12:46:44

If Xdebug cannot connect, check the following again:

- Make sure that xdebug.remote_host points to your machine. Take particular care if you're using virtual machines to use the correct address or hostname for your local machine rather than the virtual machine.

- Make sure that your IDE is configured properly and listening on the port configured in xdebug.remote_port. Take particular care to ensure that no other application is listening on the same port.

- Don't forget to also ensure that your server can actually connect to your local machine, e.g. make sure the port you are using isn't closed or blocked by firewalls or routers.

Make sure the remote debugging session is started

If you enabled the remote debugging logging and made sure the log file is writable (and ensured these configurations are correct via phpinfo()), but you're not seeing anything in the log file, the remote session itself might not be getting started.

The first thing you can try is to enable the xdebug.remote_autostart setting. This will make Xdebug always start a remote debugging session regardless of what the browser is sending. This can be an easy way to confirm that the connection itself works and that the session simply isn't being started. Most of the time, however, I would not recommend leaving this option on.
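For a quick test, the relevant php.ini lines might look like the sketch below. Treat it as a temporary diagnostic and remove the autostart line again once you've found the problem.

```ini
xdebug.remote_enable = 1

; Temporary: try to start a debugging session on every request,
; even without XDEBUG_SESSION_START or the XDEBUG_SESSION cookie
xdebug.remote_autostart = 1
```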

If you can get the remote debugging working by enabling that option, but not without it, the problem may be with your browser. Remote debugging sessions can be started by either setting the URL parameter XDEBUG_SESSION_START or the cookie XDEBUG_SESSION. I highly recommend using an add-on for this purpose. Sometimes, however, the add-ons may be a bit wonky so it may be a good idea to use the network console in your browser to ensure that the appropriate GET/POST/COOKIE variables have been sent in the request.

If you're trying to trigger the remote session via a GET/POST variable, it may not behave as you expect with AJAX requests. The same can also apply to COOKIE values provided by add-ons.

Configuring breakpoints appropriately

One problem that I've occasionally encountered (particularly with PhpStorm) is that everything else seems to work correctly, but execution never stops at the breakpoints you've set in your code.

There are two distinct common issues that you may be facing.

The first is that your IDE does not know how the remote file paths map to your local development environment. This typically happens if you're using virtual machines or remote servers. To fix this, you need to set up appropriate path mappings in your IDE.

In PhpStorm, you can find the setting hidden in "Preferences -> PHP -> Servers -> Use path mappings". Usually, you just need to make sure the project root maps to the project root on the server.

The second issue is that breakpoints may be placed on lines that cannot break. For example, you cannot set breakpoints on empty lines, because PHP does not generate breakable opcodes for those lines. If your code does not seem to reach your breakpoints, it may be a good idea to set your IDE to break on the first line, e.g. "Run -> Break at first line in PHP Scripts", and proceed from there to see how your code behaves.

Debugging multiple connections

Last, but not least, you may occasionally run into scenarios where, for example, a PHP script calls another PHP script via a URL or some other external call. By default, IDEs like PhpStorm may be configured to listen to only one connection, and the debugging session will stall because Xdebug tries to open another debugging connection.

In PhpStorm, this setting is governed by "Preferences -> PHP -> Debug -> Max. simultaneous connections". You will need to set this to more than 1 if you expect your script to make external calls to other PHP scripts while it is running. This may be particularly typical if you have some kind of microservices or scheduled tasks.

Be thorough, be certain

I've now gone through some of the most common pitfalls when it comes to configuring Xdebug and setting up remote debugging. It's a powerful feature, but sometimes it may take a bit of work to get it working. It's definitely worth it, however. Once you start using a step-by-step debugger, going back to the basic var_dump() methodology seems very unpleasant.

If there is one thing that you should try to remember from this guide, it is this: always make sure your changes are applied in phpinfo(), use command line tools like lsof to make sure things are behaving as you expect them to, and rely on log files to find detailed information.

While this guide mostly applies to Unix-like operating systems, phpinfo() and log files are also incredibly valuable tools on Windows. I know they've helped me to solve a lot of strange issues.

Edit 2019-02-25: Added a reminder to check that the port is not closed, as was pointed out.